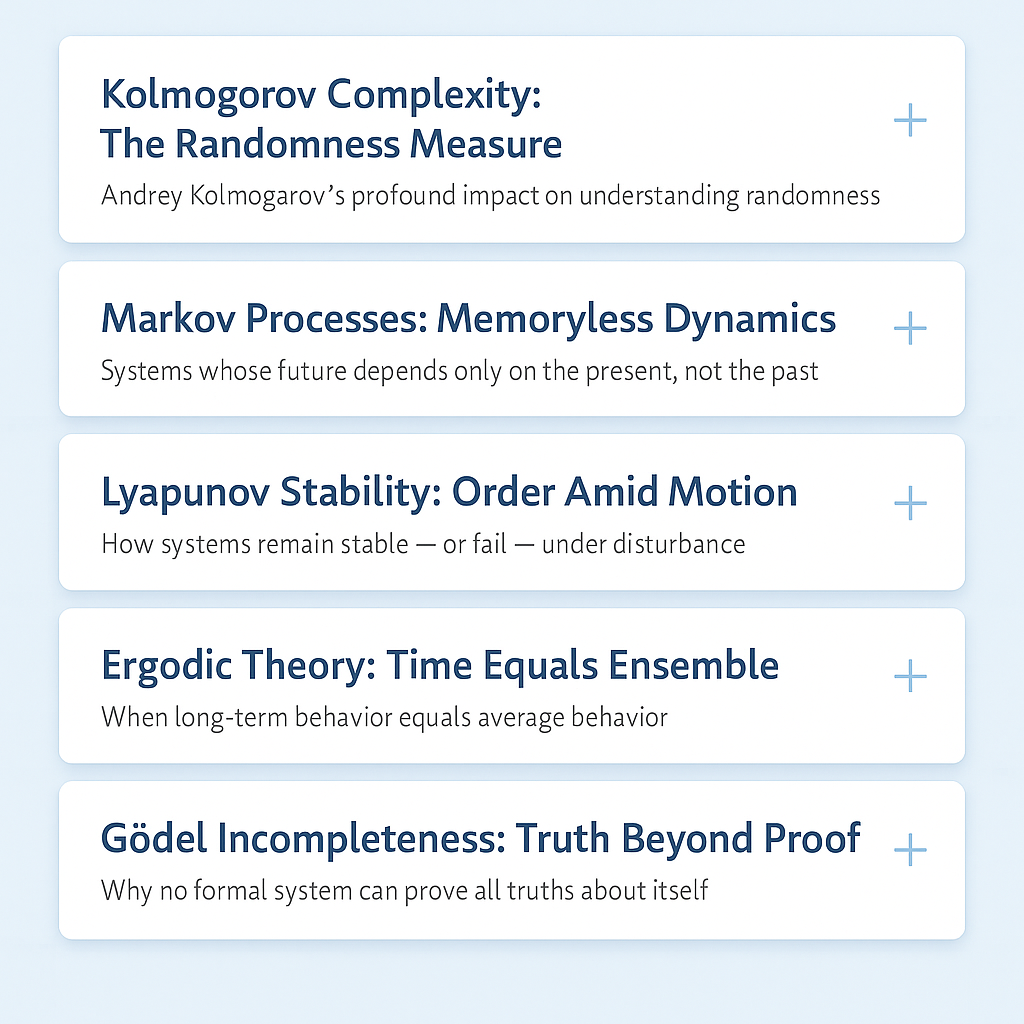

Markov Processes: Memoryless Dynamics

Systems whose future depends only on the present, not the past.

What is a Markov Process?

A Markov process is a stochastic process in which the probability distribution of the next state depends only on the current state. This property, called the Markov property or memorylessness, means that once the present state is known, the past history adds no further information about the future.
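For a discrete-time chain X_0, X_1, X_2, ..., and assuming time-homogeneous transition probabilities p_{ij} (an assumption; the text does not fix the time model), the Markov property and the stationarity condition can be written as:

$$
P(X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \dots, X_0 = i_0) = P(X_{n+1} = j \mid X_n = i) = p_{ij},
\qquad
\pi_j = \sum_i \pi_i \, p_{ij}, \quad \sum_j \pi_j = 1.
$$

Here π is a stationary distribution: a chain started with distribution π keeps that distribution at every step.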

Key Ideas and Significance

- Markov Property: Future state depends only on the current state.

- Transition Structure: Probabilities of moving between states.

- Stationary Behavior: Equilibrium distributions left unchanged by the transitions, which describe long-run behavior.

- Applications: Random processes, thermodynamics, learning systems.
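As a minimal sketch, assuming a hypothetical three-state chain (the matrix P and the helpers step, stationary_distribution, and empirical_frequencies are illustrative, not from the text), the code below samples each transition using only the current state and estimates the stationary distribution two ways: by power iteration of π ← πP, and by the fraction of time one long simulated trajectory spends in each state.

```python
import random

# Hypothetical 3-state chain (e.g. sunny, cloudy, rainy).
# Row i holds P(next = j | current = i); each row sums to 1.
P = [
    [0.7, 0.2, 0.1],
    [0.3, 0.4, 0.3],
    [0.2, 0.3, 0.5],
]


def step(state, rng):
    """Sample the next state from row `state` of P: only the current state matters."""
    return rng.choices(range(len(P)), weights=P[state])[0]


def stationary_distribution(P, iterations=1000):
    """Power iteration: apply pi <- pi P repeatedly, starting from the uniform distribution."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iterations):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def empirical_frequencies(start, steps, seed=0):
    """Fraction of time one simulated trajectory spends in each state."""
    rng = random.Random(seed)
    counts = [0] * len(P)
    state = start
    for _ in range(steps):
        state = step(state, rng)
        counts[state] += 1
    return [count / steps for count in counts]


print(stationary_distribution(P))
print(empirical_frequencies(start=0, steps=100_000))
```

For this P the exact stationary distribution is (21/46, 13/46, 12/46) ≈ (0.457, 0.283, 0.261). Power iteration converges to it because every entry of P is positive, and the simulated frequencies land close to it.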

Lyapunov Stability: Order Amid Motion

How systems remain stable — or fail — under disturbance.

What is Lyapunov Stability?

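Lyapunov stability concerns the behavior of dynamical systems near equilibrium points. An equilibrium is stable if trajectories that start sufficiently close to it stay close for all future time, so small perturbations produce only small deviations; it is asymptotically stable if those trajectories also converge back to it. Lyapunov introduced energy-like functions to assess stability without explicitly solving the governing equations.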
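A minimal sketch of the energy-function idea, assuming a damped harmonic oscillator x' = v, v' = -x - c·v as the example system (the system, the damping constant c, and the helper names are illustrative). With V the total energy, the derivative along trajectories is V̇ = ∇V · f = -c·v², computed directly from the vector field without solving the equations. The grid check only gathers numerical evidence; it is not a proof.

```python
# Assumed example system: damped harmonic oscillator
#   x' = v,  v' = -x - c*v   (c is a hypothetical damping constant)


def vector_field(x, v, c=0.5):
    """Right-hand side f(x, v) of the oscillator equations."""
    return v, -x - c * v


def V(x, v):
    """Candidate Lyapunov function: total energy (potential + kinetic)."""
    return 0.5 * (x * x + v * v)


def V_dot(x, v, c=0.5):
    """Rate of change of V along trajectories: grad V . f = x*x' + v*v'."""
    dx, dv = vector_field(x, v, c)
    return x * dx + v * dv


def check_lyapunov_on_grid(c=0.5, radius=2.0, half_steps=20):
    """Test V > 0 and V_dot <= 0 on a square grid around the origin (evidence only)."""
    h = radius / half_steps
    for i in range(-half_steps, half_steps + 1):
        for j in range(-half_steps, half_steps + 1):
            if i == 0 and j == 0:
                continue  # V(0) = 0 at the equilibrium itself
            x, v = i * h, j * h
            if V(x, v) <= 0.0 or V_dot(x, v, c) > 1e-12:
                return False
    return True


print(check_lyapunov_on_grid(c=0.5), check_lyapunov_on_grid(c=-0.5))
```

For c > 0 the check passes; for c < 0 (anti-damping) it fails, matching the instability of that system. Because V̇ vanishes whenever v = 0, this V by itself certifies stability; asymptotic stability needs an extra step such as LaSalle’s invariance principle.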

Key Ideas and Significance

- Lyapunov Function: Energy-like scalar function, positive away from the equilibrium and non-increasing along trajectories.

- Stability Classes: Stable, asymptotically stable, unstable.

- Local vs Global: Neighborhood behavior versus full state space.

- Applications: Control, robotics, chaos, physical systems.
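Lyapunov’s direct method makes these classes precise. The standard statement for an autonomous system with an equilibrium at the origin, assuming V is continuously differentiable on a neighborhood D of the origin, is:

$$
\dot{x} = f(x), \quad f(0) = 0, \qquad V(0) = 0, \quad V(x) > 0 \ \text{for } x \neq 0 \text{ in } D,
$$

$$
\dot{V}(x) = \nabla V(x) \cdot f(x) \le 0 \ \text{in } D \;\Rightarrow\; \text{stable}, \qquad
\dot{V}(x) < 0 \ \text{for } x \neq 0 \text{ in } D \;\Rightarrow\; \text{asymptotically stable}.
$$

Because D is only a neighborhood, these conclusions are local. If D is the whole state space, V is radially unbounded (V(x) → ∞ as ‖x‖ → ∞), and V̇(x) < 0 for all x ≠ 0, the origin is globally asymptotically stable.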

Kolmogorov Complexity: The Randomness Measure

Andrey Kolmogorov’s measure of randomness as incompressibility.

What is Kolmogorov Complexity?

Kolmogorov complexity, also known as algorithmic complexity, measures the information content of an object. It is defined as the length of the shortest program (for a fixed universal computer) that produces the object as output. Objects with low Kolmogorov complexity are structured and compressible, while objects with high Kolmogorov complexity are considered random.
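Because Kolmogorov complexity is incomputable, practical work often uses a real compressor as a computable stand-in: the compressed length, plus the fixed size of the decompressor, is an upper bound on description length, never the complexity itself. A minimal sketch with Python’s standard zlib module (the helper compressed_length is illustrative), comparing a highly structured byte string with bytes from the operating system’s random source:

```python
import os
import zlib


def compressed_length(data: bytes) -> int:
    """Length of zlib-compressed data: a computable upper-bound proxy, not K itself."""
    return len(zlib.compress(data, 9))


structured = b"ab" * 5000           # 10,000 bytes with an obvious short description
random_bytes = os.urandom(10_000)   # 10,000 bytes from the OS randomness source

print(compressed_length(structured), compressed_length(random_bytes))
```

The repetitive string shrinks to a small fraction of its size, while the random bytes essentially do not compress. A compressor only gives upper bounds: a string zlib cannot shrink may still have a short program, such as a pseudorandom sequence generated from a fixed seed.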

Key Ideas and Significance

- Incompressibility: A string is incompressible, and hence random, if its shortest description is about as long as the string itself.

- Objective Randomness: Randomness defined for an individual string, without reference to a probability distribution.

- Incomputability: No algorithm can compute Kolmogorov complexity for all strings.

- Applications: Information theory, cryptography, computer science, biology.
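Fix a universal machine U. The definition, the invariance theorem that makes it machine-independent up to an additive constant, and the counting argument behind incompressibility are:

$$
K_U(x) = \min\{\, |p| : U(p) = x \,\}, \qquad |K_U(x) - K_{U'}(x)| \le c_{U,U'} \ \text{for all } x,
$$

$$
\#\{\, p : |p| < n \,\} = \sum_{i=0}^{n-1} 2^i = 2^n - 1 < 2^n = \#\{0,1\}^n.
$$

Since each program outputs at most one string, at least one n-bit string x has K_U(x) ≥ n. The same count shows that fewer than 2^{n-c} strings satisfy K_U(x) < n - c, so fewer than a 2^{-c} fraction of n-bit strings can be compressed by c or more bits.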

Ergodic Theory: Time Equals Ensemble

When long-term behavior equals average behavior.

What is Ergodic Theory?

Ergodic theory studies the long-term statistical behavior of measure-preserving dynamical systems. In an ergodic system, long-term time averages along almost every trajectory coincide with space (ensemble) averages, so observing one typical trajectory for long enough is equivalent to observing the entire system statistically.
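The circle rotation x ↦ x + α (mod 1) is a standard illustration, used here as an assumed example: for irrational α it is ergodic with respect to the uniform measure on [0, 1), while for rational α it is not. The sketch (helper names are illustrative) compares the time average of f(x) = x along a single orbit with its space average 1/2:

```python
import math


def time_average(f, x0, alpha, n_steps):
    """Average of f along the rotation orbit x_n = (x0 + n * alpha) mod 1."""
    total = 0.0
    for n in range(n_steps):
        total += f((x0 + n * alpha) % 1.0)
    return total / n_steps


def observable(x):
    """The observable f(x) = x; its space average over [0, 1) is 1/2."""
    return x


SPACE_AVERAGE = 0.5

irrational = time_average(observable, 0.0, math.sqrt(2) - 1, 100_000)  # ergodic case
rational = time_average(observable, 0.0, 0.5, 100_000)                  # non-ergodic case
print(irrational, rational)
```

With α = √2 - 1 the orbit fills the circle evenly and the time average approaches 1/2; every starting point works here, since irrational rotations are uniquely ergodic. With α = 1/2 the orbit from 0 visits only 0 and 0.5, so the time average is 0.25 and time and ensemble averages disagree.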

Key Ideas and Significance

- Ergodic Hypothesis: Time averages equal ensemble averages.

- Invariant Measure: A probability measure preserved by the dynamics.

- Mixing: Events become asymptotically independent over time; mixing is stronger than ergodicity.

- Applications: Statistical mechanics, thermodynamics, chaos, number theory.
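For a measure-preserving system (X, μ, T) with μ a probability measure, the ideas above correspond to the standard definitions below; the time-average statement is Birkhoff’s ergodic theorem for an ergodic T and an integrable observable f:

$$
\mu(T^{-1}A) = \mu(A) \ \text{for all measurable } A \qquad \text{(invariant measure)}
$$

$$
\lim_{N\to\infty} \frac{1}{N} \sum_{n=0}^{N-1} f(T^n x) = \int_X f \, d\mu \quad \text{for } \mu\text{-almost every } x \qquad \text{(time average = ensemble average)}
$$

$$
\lim_{n\to\infty} \mu(T^{-n}A \cap B) = \mu(A)\,\mu(B) \ \text{for all measurable } A, B \qquad \text{(mixing)}
$$

Mixing implies ergodicity, but not conversely: an irrational rotation of the circle is ergodic but not mixing.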

Gödel Incompleteness: Truth Beyond Proof

Why no consistent, sufficiently strong formal system can prove every arithmetical truth.

What are Gödel’s Incompleteness Theorems?

Gödel’s incompleteness theorems show that any consistent, effectively axiomatized formal system capable of expressing basic arithmetic contains statements that are true but cannot be proven within the system. For such systems, completeness and consistency cannot coexist.
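In standard notation, with T the formal system, Prov_T its provability predicate, and ⌜φ⌝ the Gödel number of a formula φ, the two theorems can be summarized as follows, assuming T is consistent, effectively axiomatized, and strong enough to represent its own provability. The first line uses the diagonal lemma to obtain a sentence G:

$$
T \vdash G \leftrightarrow \neg \mathrm{Prov}_T(\ulcorner G \urcorner) \quad\Longrightarrow\quad T \nvdash G,
$$

$$
\mathrm{Con}_T :\equiv \neg \mathrm{Prov}_T(\ulcorner 0 = 1 \urcorner) \quad\Longrightarrow\quad T \nvdash \mathrm{Con}_T.
$$

The sentence G asserts its own unprovability. Showing that T also cannot prove ¬G needs ω-consistency in Gödel’s original argument, or Rosser’s modified sentence under plain consistency.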

Key Ideas and Significance

- First Theorem: Such a system contains statements it can neither prove nor refute; Gödel’s sentence is true but unprovable.

- Second Theorem: Such a system, if consistent, cannot prove its own consistency.

- Self-Reference: Encoded in arithmetic via Gödel numbering and diagonalization.

- Implications: Limits of formal logic, computation, and AI.
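The diagonal trick behind self-reference has a well-known programming counterpart: take a template with one hole, fill the hole with the template’s own quoted text, and the result is a program that prints its own source. The sketch below (the names TEMPLATE, diagonalize, and run are illustrative) shows only this self-reference mechanism; it is an analogy to the diagonal lemma, not a formalization of Gödel’s proof.

```python
import contextlib
import io

# Template with one hole (%r). Filling the hole with the template's own quoted
# text is the "diagonal" step: a description applied to itself.
TEMPLATE = 's = %r\nprint(s %% s)'


def diagonalize(template: str) -> str:
    """Return the program obtained by substituting the template into itself."""
    return template % template


def run(program: str) -> str:
    """Execute a program and capture everything it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(program, {})
    return buffer.getvalue()


program = diagonalize(TEMPLATE)
print(program)
```

Running `program` prints exactly its own text (followed by the newline that `print` adds), much as the Gödel sentence G refers to its own Gödel number.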